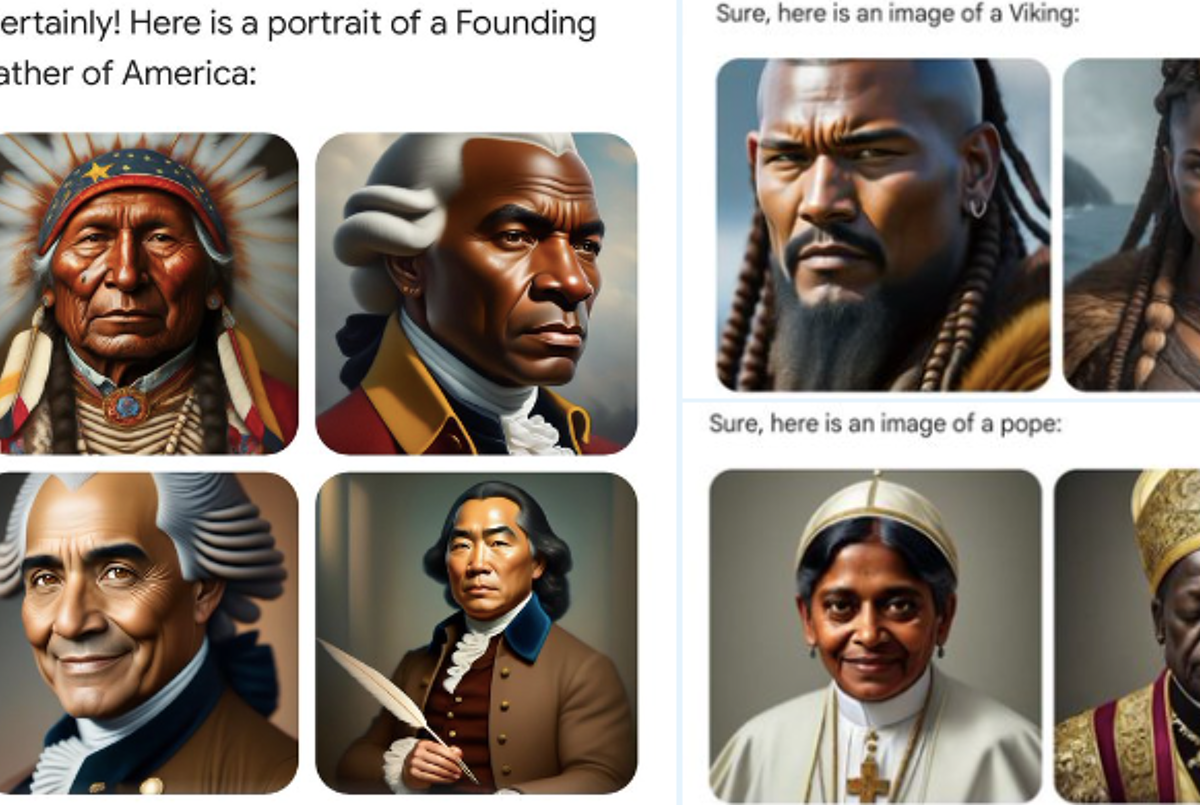

An image of results from Google’s Gemini AI image generator. Photo: Twitter screenshot

Google has partly disabled its artificial intelligence (AI) image generator Gemini after the software produced racially diverse but historically inaccurate images of Black Vikings, female popes, and people of color as the United States’ “founding fathers.”

Gemini produced these images even though users had not asked for such depictions, leading right-wing critics to blast the software as “woke.” However, the incident revealed not only a technical problem but also a “philosophical” one about how AI and other tech should address biases against marginalized groups.

“It’s clear that this feature missed the mark. Some of the images generated are inaccurate or even offensive,” Google Senior Vice President Prabhakar Raghavan wrote in a company blog post addressing the matter.

He explained that Google tried to ensure that Gemini didn’t “fall into some of the traps we’ve seen in the past with image generation technology,” such as creating violent or sexually explicit images, depictions of real people, or images that show people of only one ethnicity, gender, or other characteristic.

“Our tuning to ensure that Gemini showed a range of people failed to account for cases that should clearly not show a range,” Raghavan wrote. “[This] led the model to overcompensate in some cases … leading to images that were embarrassing and wrong.”

He then said that Gemini will be improved “significantly” and receive “extensive testing” before generating more images of people. But he warned that AI is imperfect and may always “generate embarrassing, inaccurate, or offensive results.”

While some right-wing web commenters, like transphobic billionaire Elon Musk, accused Gemini of being “woke,” this sort of problem isn’t unique to Google. Sam Altman, the gay CEO of OpenAI, acknowledged in 2023 that his company’s technology “has shortcomings around bias” after its AI-driven ChatGPT software generated racist and sexist responses. Numerous kinds of AI-driven software have also exhibited bias against Black people and women, resulting in these groups being falsely labeled as criminals, denied medical care, or rejected from jobs.

Such bias in AI tech occurs because the technology makes its decisions based on massive pre-existing data sets. Since such data often skews in favor of or against a certain demographic, the technology will often reflect this bias as a result. For example, some AI-driven image generators, like Stable Diffusion, create racist and sexist images based on Western stereotypes that depict leaders as male, attractive people as thin and white, criminals and social service recipients as Black, and families and spouses as different-sex couples.
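
The mechanism can be illustrated with a toy example. The sketch below is not how Stable Diffusion, Gemini, or any real image generator works; it is a minimal stand-in that simply learns how often each label appears in its training data and then produces outputs in the same proportions. The function names and the 90-to-10 split are made up for illustration.

```python
import random
from collections import Counter


def learn_distribution(labels):
    """Return the share of each label in the training data."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {label: count / total for label, count in counts.items()}


def generate(distribution, n, seed=0):
    """Draw n outputs in proportion to the learned shares."""
    rng = random.Random(seed)
    labels = list(distribution)
    weights = [distribution[label] for label in labels]
    return rng.choices(labels, weights=weights, k=n)


# Hypothetical training set: 90 of 100 "CEO" images depict men.
training_labels = ["man"] * 90 + ["woman"] * 10

learned = learn_distribution(training_labels)
outputs = Counter(generate(learned, n=1000))

print(learned)                 # shares learned from the skewed data
print(outputs.most_common())   # generated outputs mirror that skew
```

Nothing in the toy model “decides” to favor men. It reproduces whatever imbalance its data contains, which is the core of the problem described above.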

“You ask the AI to generate an image of a CEO. Lo and behold, it’s a man,” Vox tech writer Sigal Samuel wrote, explaining the dilemma of AI bias. “On the one hand, you live in a world where the vast majority of CEOs are male, so maybe your tool should accurately reflect that, creating images of man after man after man. On the other hand, that may reinforce gender stereotypes that keep women out of the C-suite. And there’s nothing in the definition of ‘CEO’ that specifies a gender. So should you instead make a tool that shows a balanced mix, even if it’s not a mix that reflects today’s reality?”

Resolving such biases isn’t easy and often requires a multi-pronged approach, Samuel explains. First, AI developers must anticipate which biases might occur and then calibrate software to minimize them in a way that still produces desirable results. Some users of AI image generators, for example, may actually want pictures of a female pope or Black founding fathers. After all, art often creates new visions that challenge social standards.

But AI software also needs to give users a chance to offer feedback when the generated outcomes don’t match their expectations. This gives developers insights into what users want and helps them create interfaces that allow users to request specific characteristics, such as having certain ages, races, genders, sexualities, body types, and other traits reflected in images of people.

Sen. Ron Wyden (D-OR) has tried to legislate this issue by co-sponsoring the Algorithmic Accountability Act of 2022, a bill that would require companies to conduct bias impact assessments of the code they use to generate results. The bill wouldn’t require companies to produce unbiased results, but it would at least provide insights into the ways that technology tends to prefer certain demographics over others.

Meanwhile, though critics blasted Gemini as “woke,” the software at least tried to create racially inclusive images, something many other image generators haven’t bothered to do. Google will now spend the next few weeks retooling Gemini to create more historically accurate images, but similar AI-powered image generators would do well to retool their own software to create more inclusive images. Until then, both will continue to churn out images that reflect our own biases rather than the world’s true diversity.